Key Points

- Nvidia’s Blackwell AI chips, which are up to 30 times faster than their predecessors on some AI tasks, are overheating in server racks designed to hold up to 72 chips.

- Nvidia has asked suppliers to revise the rack design several times to resolve the problem.

- The overheating adds to delays that could affect major customers like Meta, Google, and Microsoft.

- Cloud service providers worry about meeting their data center timelines. Nvidia says it is working closely with suppliers and customers to address the issue.

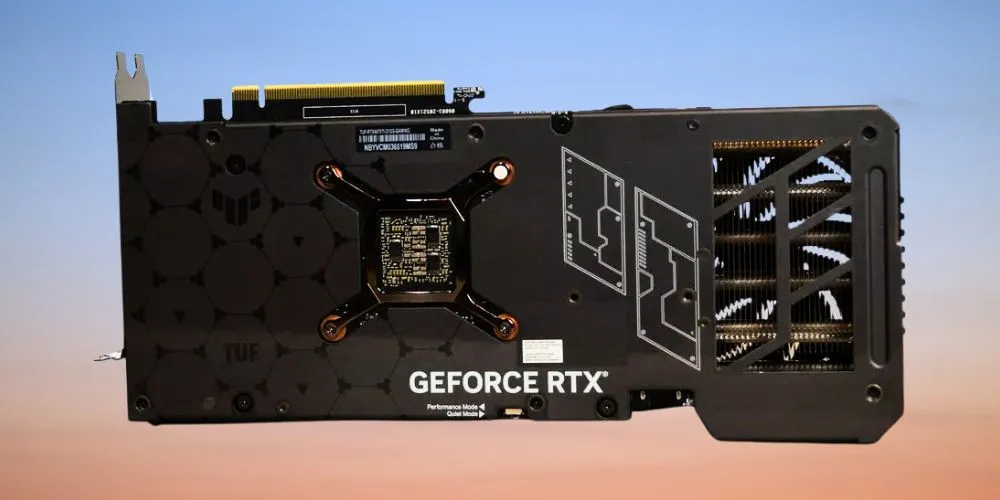

Nvidia’s highly anticipated Blackwell AI chips, which have already faced shipping delays, are now overheating in servers, raising customer concerns about data center readiness, The Information reported on Sunday. The overheating occurs when the Blackwell graphics processing units (GPUs) are connected in server racks designed to hold up to 72 chips.

According to employees, customers, and suppliers familiar with the situation, the problem has led Nvidia to ask its suppliers to change the rack design several times. A spokesperson for Nvidia said: “Nvidia is working with leading cloud service providers as an integral part of our engineering team and process. The engineering iterations are normal and expected.”

Nvidia first unveiled the Blackwell chips in March, describing them as a significant leap in performance. Each chip combines two silicon dies, each the size of the company’s previous chips, into a single component that Nvidia says is up to 30 times faster at AI tasks such as generating chatbot responses. Originally slated to ship in the second quarter, the chips have faced delays that could affect major customers, including Meta Platforms, Alphabet’s Google, and Microsoft.

The overheating has heightened concerns among cloud service providers about delays in bringing new data centers online. These customers rely on Nvidia’s latest chips to run their AI workloads, and prolonged delays could hold back their expansion plans.

Nvidia’s engineering teams are working with cloud service providers to refine the server rack designs and fix the overheating. However, the repeated redesigns underscore how hard it is to deploy next-generation AI infrastructure at scale. Despite the setbacks, Nvidia remains a leader in AI chips, and once the racks are working, the Blackwell GPUs are expected to deliver substantial gains in speed and efficiency.