Key Points:

- Meta revised its labeling policy to differentiate between lightly edited and fully AI-generated images.

- Creators reported that real photos were being mislabeled after minor edits. In response, Meta decided to stop conflating AI edits with fully AI-generated images.

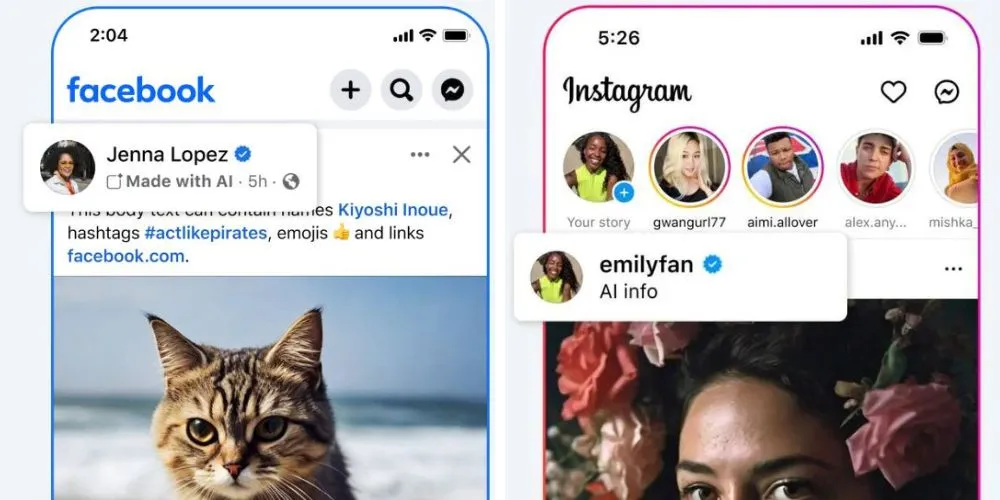

- The label has been updated from “Made With AI” to “AI info” for better clarity and context. Meta continues integrating AI tools across its platforms.

- The reliance on C2PA metadata for detection was not foolproof, as it could be easily removed or bypassed.

Meta has revised its “Made With AI” labeling policy after numerous user images were incorrectly flagged as AI-generated when real photos were edited with basic software. This adjustment aims to provide more accurate and contextually appropriate labels for edited images.

Previously, Meta’s automated systems would flag content as AI-made simply because it had been lightly edited in Adobe Photoshop. Photoshop exports include metadata indicating the use of some generative AI features, which led Meta to label such images as “Made With AI.” Once an image was flagged, its creator couldn’t remove the label, causing confusion and frustration.

For example, content creators like YouTuber Justine “iJustine” Ezarik experienced issues where minor edits resulted in their images being tagged as AI-generated. Ezarik pointed out this discrepancy in a Threads post, emphasizing that her real photos were incorrectly labeled due to minor adjustments.

Recognizing these issues, Meta decided to stop conflating AI edits with fully AI-generated images. The company acknowledged that its labels often didn’t align with user expectations and lacked sufficient context. Meta’s update, guided by its Oversight Board, aims to address this problem by changing the label from “Made With AI” to “AI info.” Users can now click on this label for more detailed information.

Meta’s push for more AI tools across its platforms includes the launch of “Meta AI” in Instagram and WhatsApp, allowing users to alter photos with AI in-app. The initial plan to automatically detect and label AI content, announced in February, relied heavily on the assumption that all AI-generated or AI-altered images would carry metadata following C2PA, a content provenance standard that can record when AI tools were used on an image.

However, this approach was not foolproof. Adobe could easily attach AI metadata to exported content, even content that had only been lightly retouched, but that metadata was just as easy to remove. Users could take screenshots or re-export images through different programs to strip it, bypassing Meta’s detection system.
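To make the weakness concrete, here is a minimal toy sketch in Python. It is not Meta’s system and does not parse real C2PA data: the `Image` class, the `ai_provenance` key, and both functions are hypothetical names invented for illustration. It only shows why a label that depends on embedded metadata disappears when the pixels are copied without that metadata.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Image:
    """Toy stand-in for an image file: pixel data plus embedded metadata."""
    pixels: bytes
    metadata: dict = field(default_factory=dict)


def label_from_metadata(image: Image) -> Optional[str]:
    """Return a label only if the (hypothetical) provenance tag is present."""
    if image.metadata.get("ai_provenance"):
        return "Made With AI"
    return None


def screenshot(image: Image) -> Image:
    """Simulate a screenshot: the pixels survive, the metadata does not."""
    return Image(pixels=image.pixels)


# A lightly retouched photo exported with an AI-related metadata tag.
exported = Image(pixels=b"\x00\x01\x02", metadata={"ai_provenance": "generative edit"})
screenshotted = screenshot(exported)

print(label_from_metadata(exported))       # label is applied
print(label_from_metadata(screenshotted))  # no label: the tag is gone
```

The two images contain identical pixels, yet only the first one is labeled. A metadata-only check sees the file's self-description and not the picture itself.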

There is no perfect solution for detecting AI images online. Internet users must remain vigilant and learn to identify clues that suggest an image might be AI-generated.