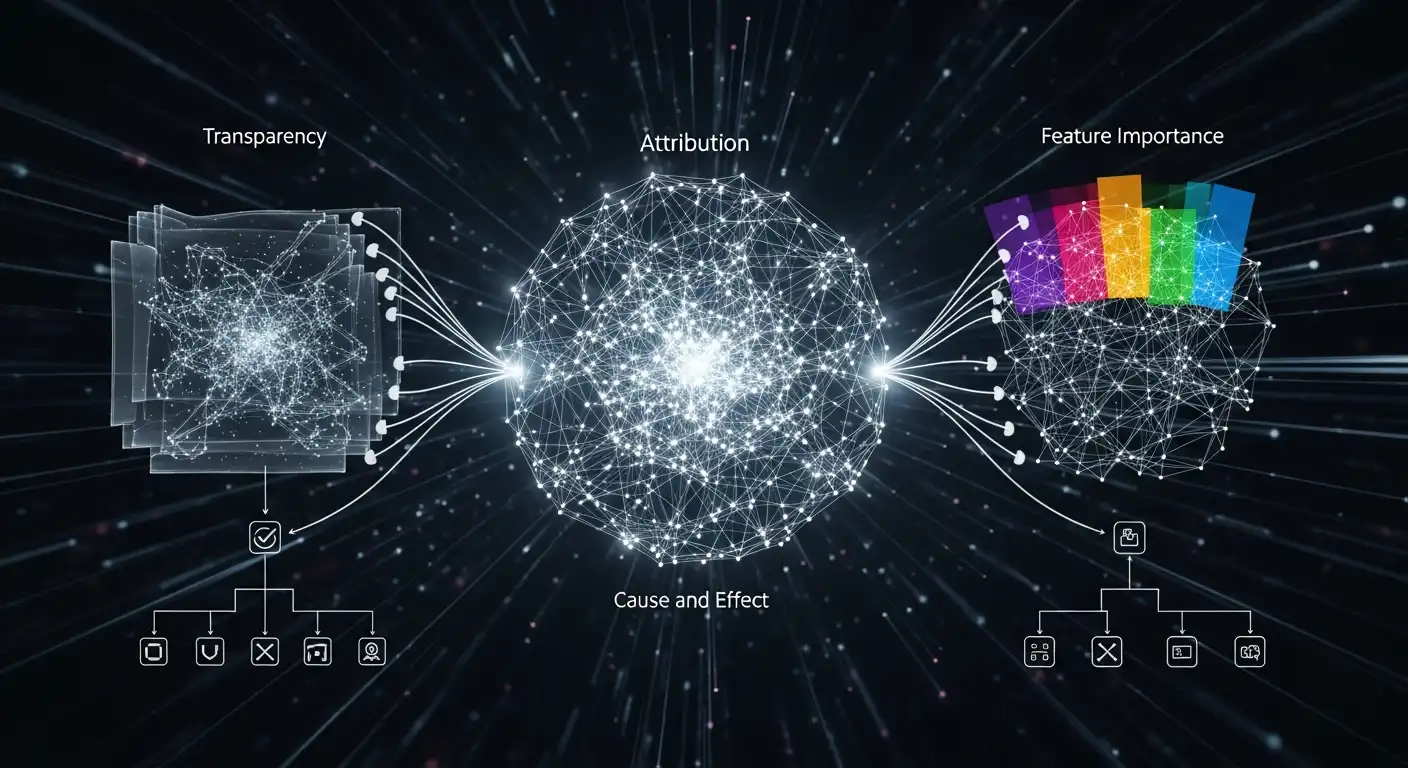

As Artificial Intelligence (AI) permeates more aspects of our lives, the demand for transparency and accountability in AI systems has given rise to Explainable AI (XAI). In contrast to traditional "black box" AI models, XAI aims to demystify how AI algorithms make decisions, providing insight into how and why a particular decision was reached. The pursuit of explainability is not merely a technical nicety but a crucial step toward building trust, ensuring ethical use, and fostering wider adoption of AI technologies.

Decoding Complex Algorithms

Many AI algorithms are complex models that can deliver accurate results while offering little insight into how those results are produced. Explainable AI seeks to decode these models and make their decision processes understandable to human users. This transparency is especially important in high-stakes applications such as healthcare, finance, and autonomous vehicles, where decisions can have significant real-world consequences.
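To make "decoding" a model more concrete, here is a minimal sketch of one widely used model-agnostic technique, permutation feature importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The model is only ever called, never inspected, so the same idea applies to genuinely opaque models. The `black_box_model` rule, the toy data, and every other name below are hypothetical stand-ins chosen for illustration; in practice, libraries such as scikit-learn ship their own implementations of this technique.

```python
import random
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], int]


def black_box_model(row: Sequence[float]) -> int:
    # Hypothetical stand-in for an opaque model: a toy rule that relies
    # heavily on feature 0 and only slightly on feature 1.
    return 1 if 2.0 * row[0] + 0.1 * row[1] > 1.0 else 0


def accuracy(model: Model, X: List[List[float]], y: List[int]) -> float:
    # Fraction of rows where the model's prediction matches the label.
    correct = sum(1 for row, label in zip(X, y) if model(row) == label)
    return correct / len(y)


def permutation_importance(model: Model, X: List[List[float]], y: List[int],
                           n_repeats: int = 10, seed: int = 0) -> List[float]:
    # For each feature, shuffle its column across rows (breaking its link to
    # the labels) and record the mean drop in accuracy versus the baseline.
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


if __name__ == "__main__":
    rng = random.Random(42)
    X = [[rng.random(), rng.random()] for _ in range(500)]
    # Labels come from the model itself, so baseline accuracy is 1.0.
    y = [black_box_model(row) for row in X]
    scores = permutation_importance(black_box_model, X, y)
    for name, score in zip(["feature_0", "feature_1"], scores):
        print(f"{name}: mean accuracy drop = {score:.3f}")
```

Because the toy rule weights feature_0 far more heavily than feature_1, shuffling feature_0 should cause a much larger accuracy drop. Note that permutation importance describes which inputs a model relies on overall; it does not by itself explain why the model made any single decision.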

Trust and Ethical AI

The opacity of AI models can breed skepticism and mistrust among users and stakeholders. Explainable AI helps bridge this gap by showing clearly how a system arrives at its decisions. This transparency matters not only for end users but also for regulators and policymakers who must ensure ethical use and adherence to guidelines in the rapidly evolving landscape of AI applications.

Interpretability vs. Accuracy

Explainability comes with challenges, particularly when it must be balanced against accuracy. There is often a trade-off between highly accurate but complex models and simpler, more interpretable ones. Striking the right balance is delicate, because a useful model must be both accurate and understandable. Explainable AI methodologies aim to navigate this trade-off, providing meaningful insights while giving up as little predictive accuracy as possible.

Empowering Decision-Makers

Explainable AI enables human decision-makers to collaborate effectively with AI systems. When users understand the reasoning behind AI-generated recommendations, they can make better-informed decisions, especially in domains such as medical diagnosis and financial forecasting. This collaborative approach positions AI not as a standalone decision-maker but as a valuable tool that augments human expertise.

Conclusion

Explainable AI is a pivotal development in the evolution of artificial intelligence. It addresses the need for transparency, accountability, and trust in AI systems. As AI becomes further integrated into society, the ability to explain complex models becomes paramount. Explainable AI connects technology and its users, fostering a collaborative relationship in which humans and AI work together toward informed, ethical, and responsible decision-making.