Four researchers, Nurgali Kadyrbek, Madina Mansurova, Adai Shomanov, and Gaukhar Makharova, from Al-Farabi Kazakh National University and Nazarbayev University, have proposed a Kazakh speech recognition model built on a convolutional neural network (CNN) with fixed character-level filters.

Unveiling Kazakh Language Transcription Challenges

This research addresses the task of transcribing spoken Kazakh as the language continues to evolve. It covers three areas: the phonetic structure of Kazakh, the technical considerations involved in collecting a transcribed audio corpus, and the use of deep neural networks for speech modeling. The goal is more accurate and efficient transcription of spoken Kazakh despite ongoing shifts in the language.

Curating an Invaluable Transcribed Audio Corpus

Central to the research is a high-quality transcribed audio corpus containing 554 hours of data. Beyond the transcriptions themselves, the corpus provides the frequencies of letters and syllables, and it records demographic parameters of the native speakers: gender, age, and region of residence. Because it covers a universal vocabulary, the corpus is intended as a base resource for developing speech-related modules.
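As a rough illustration of the letter-frequency statistics mentioned above, the following minimal sketch counts relative letter frequencies over a list of transcripts. The function name `letter_frequencies` and the two sample sentences are hypothetical and are not taken from the corpus or from the authors' tooling. Syllable frequencies would need a Kazakh syllabification step, which is not attempted here.

```python
from collections import Counter


def letter_frequencies(transcripts):
    """Return relative letter frequencies over an iterable of transcript strings.

    Hypothetical helper for illustration; not the authors' code.
    """
    counts = Counter(
        ch
        for text in transcripts
        for ch in text.lower()
        if ch.isalpha()  # skip spaces, digits, and punctuation
    )
    total = sum(counts.values())
    if total == 0:
        return {}
    # most_common() orders letters from most to least frequent
    return {ch: n / total for ch, n in counts.most_common()}


# Hypothetical sample transcripts, not taken from the corpus.
sample = ["Сәлем, әлем!", "Қазақ тілі"]
freqs = letter_frequencies(sample)
for letter, share in freqs.items():
    print(f"{letter}: {share:.3f}")
```

Lowercasing before counting merges upper- and lowercase forms of the same letter, and Kazakh-specific letters such as ә, қ, and і are treated like any other alphabetic character.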

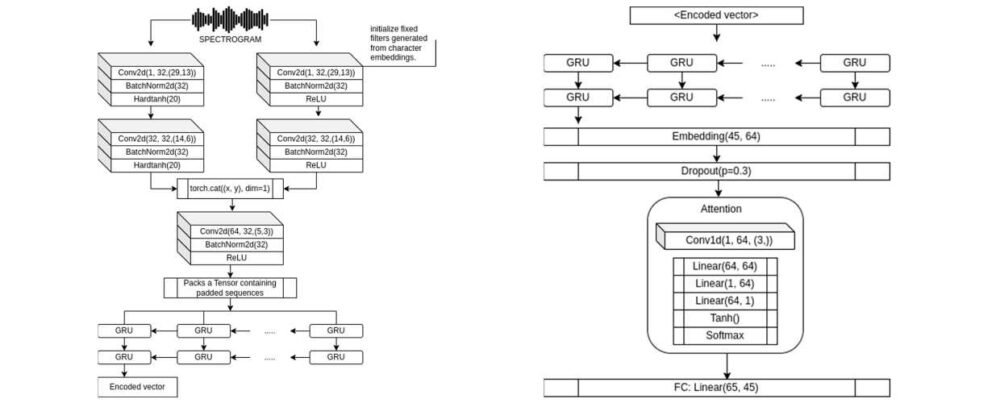

Raising the Bar with Enhanced Speech Recognition

Beyond building the corpus, the researchers ran speech recognition experiments with the DeepSpeech2 model, which the work describes as a sequence-to-sequence architecture with an encoder, a decoder, and an attention mechanism. To strengthen the model, they introduced filters initialized with character-level (symbol-level) embeddings, which reduces the model’s dependence on precise positioning in the feature maps. During training, the convolutional filters for spectrograms and those for character-level features are prepared concurrently. The resulting model is 66.7% lighter while preserving relative accuracy, and on the test sample it achieves a 7.6% lower character error rate (CER) than existing models.
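For readers unfamiliar with the metric, the character error rate is conventionally the character-level edit distance between the reference transcript and the model output, divided by the reference length. The sketch below shows that standard definition. The function names and the example strings are illustrative assumptions, not the authors' evaluation code, and the article does not state whether the 7.6% figure is a relative or an absolute difference.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (substitutions, insertions, deletions)."""
    prev = list(range(len(hyp) + 1))  # distance from empty ref prefix to each hyp prefix
    for i, r in enumerate(ref, start=1):
        curr = [i]  # distance from ref[:i] to the empty hyp prefix
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # delete r from the reference
                curr[j - 1] + 1,     # insert h into the reference
                prev[j - 1] + cost,  # match or substitute
            ))
        prev = curr
    return prev[-1]


def cer(reference, hypothesis):
    """Character error rate: edit distance divided by reference length."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return edit_distance(reference, hypothesis) / len(reference)


# Illustrative example: two Kazakh-specific letters recognized as their Russian look-alikes.
print(cer("қазақ", "казак"))  # 2 substitutions over 5 characters
```

Because the denominator is the reference length, CER can exceed 1.0 when the hypothesis contains many inserted characters.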

The research delivers three results: a high-quality audio corpus, a modified speech recognition model, and outcomes that extend beyond the Kazakh language. Because the architecture can run on platforms with limited resources, it is a candidate for practical deployment, and the approach may carry over to speech-related applications in other languages.