Key Points

- OSU researchers enhanced AI wildlife identification accuracy using fewer, well-curated images.

- The AI model achieved nearly 90% accuracy with just 10,000 training images focused on bighorn sheep.

- Training on a single species with varied background environments improved results at novel sites.

- This approach reduces the need for large datasets, cutting energy use and computing costs.

Scientists at Oregon State University (OSU) have significantly improved the ability of artificial intelligence to identify wildlife species from motion-activated camera images. Their innovative method, which embraces a “less-is-more” training data approach, makes wildlife monitoring more accurate and cost-effective.

Traditionally, wildlife researchers collect large volumes of camera trap images and then review them manually, a time-consuming and inefficient process. AI models can help automate image classification, but they often struggle with accuracy, especially when analyzing photos from unfamiliar environments.

“One of the biggest limitations of current AI systems is their low accuracy in novel locations, where the model hasn’t been trained,” said co-author Christina Aiello, a research associate at OSU’s College of Agricultural Sciences.

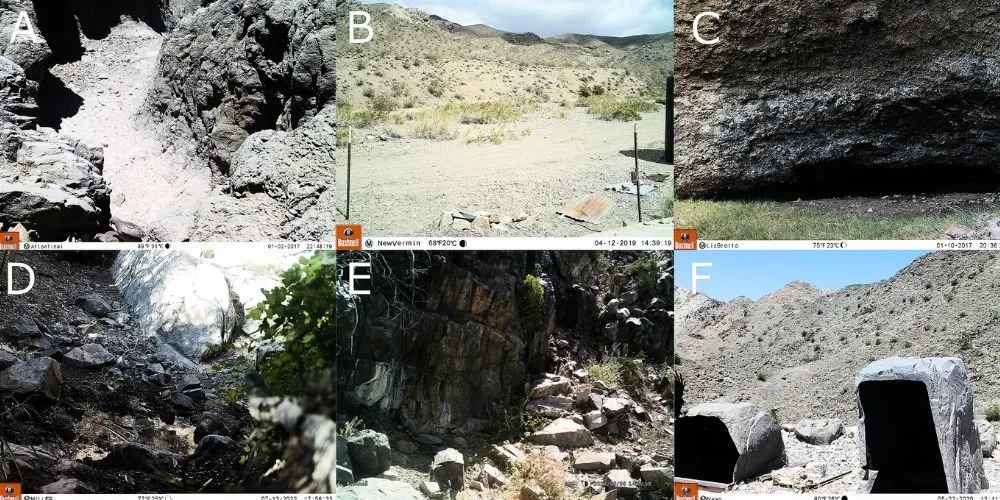

Led by undergraduate Owen Okuley, the research team found that training the AI on a single species (in this case, bighorn sheep) while including a diverse range of environmental backgrounds significantly improved results at sites the model had not seen before. The findings were published in Ecological Informatics.

By training the AI model with only 10,000 curated images, the team achieved nearly 90% accuracy, comparable to other models trained on much larger datasets. This streamlined approach not only improves performance but also reduces the computational power and energy required, an important consideration in conservation work.
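The idea of a small training set with varied backgrounds, tested at unfamiliar sites, can be illustrated with a short sketch. This is not the team's code: the record fields (`site`, `path`), the function names, and the round-robin sampling rule are all assumptions made for illustration. It only shows one plausible way to keep evaluation sites out of training and to spread a fixed image budget evenly across camera locations.

```python
import random
from collections import defaultdict


def split_by_site(records, held_out_sites):
    """Separate records so that held-out sites never appear in training."""
    train = [r for r in records if r["site"] not in held_out_sites]
    novel = [r for r in records if r["site"] in held_out_sites]
    return train, novel


def curate_balanced(records, budget, seed=0):
    """Pick up to `budget` records, drawing round-robin across sites
    so that no single background dominates the training set."""
    rng = random.Random(seed)
    by_site = defaultdict(list)
    for r in records:
        by_site[r["site"]].append(r)
    for imgs in by_site.values():
        rng.shuffle(imgs)  # random order within each site

    chosen = []
    sites = sorted(by_site)
    # Take one image per site per pass until the budget or the data runs out.
    while len(chosen) < budget and any(by_site[s] for s in sites):
        for s in sites:
            if by_site[s] and len(chosen) < budget:
                chosen.append(by_site[s].pop())
    return chosen


# Hypothetical metadata: four camera sites with uneven image counts.
counts = {"A": 100, "B": 5, "C": 50, "D": 20}
records = [
    {"site": site, "path": f"{site}/{i}.jpg"}
    for site, n in counts.items()
    for i in range(n)
]

train_pool, novel_test = split_by_site(records, held_out_sites={"D"})
train_set = curate_balanced(train_pool, budget=30)
```

In this sketch, site D stands in for a "novel location": none of its images can reach the training set, so accuracy measured on `novel_test` reflects performance at a place the model has never seen.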

“We found that it’s not about having more data, but about having the right variety of data,” Aiello said. “The key was narrowing our focus while still incorporating enough visual diversity.”

The project originated from OSU’s Fisheries and Wildlife Undergraduate Mentoring Program, where Okuley was paired with Aiello and later joined Professor Clinton Epps’ lab. Okuley conducted fieldwork, managed camera trap data, and eventually led his own AI research study.

After graduating this June, Okuley will begin a Ph.D. at the University of Texas at El Paso, where he aims to build AI tools capable of identifying individual waterfowl species and their hybrids based on distinct traits.

The study also included collaborators from Johns Hopkins University, the California Department of Fish and Wildlife, and the National Park Service.