For over a century, the language of filmmaking has been written in the physical world. It was a language of location scouts battling the elements, of massive, painstakingly constructed sets, of actors trying to conjure emotion while staring at a sterile green screen, and of the solemn promise to “fix it in post.” For all the masterpieces it has produced, this traditional pipeline is linear, often inefficient, and profoundly disconnected. It separates the creative vision from the technical execution, forcing directors and actors to fly blind and make multimillion-dollar decisions based on imagination and hope. But as we accelerate toward 2026, a new cinematic language is not just being written; it is being rendered in real time. This is the language of Virtual Production (VP).

The year 2026 will be remembered as the inflection point where Virtual Production ceased to be a novel, high-cost experiment reserved for sci-fi blockbusters and became a foundational, democratizing force across the entire spectrum of film and entertainment. It is a paradigm shift as profound as the advent of sound or the transition from film to digital. VP is not merely a better green screen; it is a holistic methodology that merges the physical and digital worlds on set, in-camera, and in real-time. Powered by the hyper-realistic graphics of game engines, the immersive glow of massive LED walls, and a suite of interconnected technologies, Virtual Production is collapsing the traditional filmmaking pipeline, empowering creators with unprecedented control and unlocking a new golden age of visual storytelling. This is not just the future of VFX; it is the future of filmmaking itself.

Deconstructing the Revolution: What is Virtual Production?

Before we can project its impact in 2026, we must first define what Virtual Production truly is. It is a broad umbrella term that encompasses a host of technologies and techniques, but at its heart, it is a philosophy of real-time creativity and iteration. It is about moving the post-production process into pre-production and on-set production, allowing filmmakers to see their final, pixel-perfect shots live, in-camera, while they are shooting.

This is a fundamental departure from the delayed gratification and guesswork of traditional VFX, where the true look of a shot might not be known until months after filming has wrapped.

Beyond the Green Screen: The Core Principles of VP

The key difference between Virtual Production and traditional green screen techniques lies in their interactive and immediate nature. A green screen is a passive canvas; an LED Volume is an active, dynamic environment.

These core principles are what transform VP from a simple background replacement tool into a revolutionary creative methodology.

- Real-Time Rendering: This is the engine that powers VP. Game engines like Unreal Engine or Unity render photorealistic 3D environments fast enough to keep pace with the camera, frame by frame. This means any change made to the digital scene (the lighting, the position of an object, the time of day) is visible instantly.

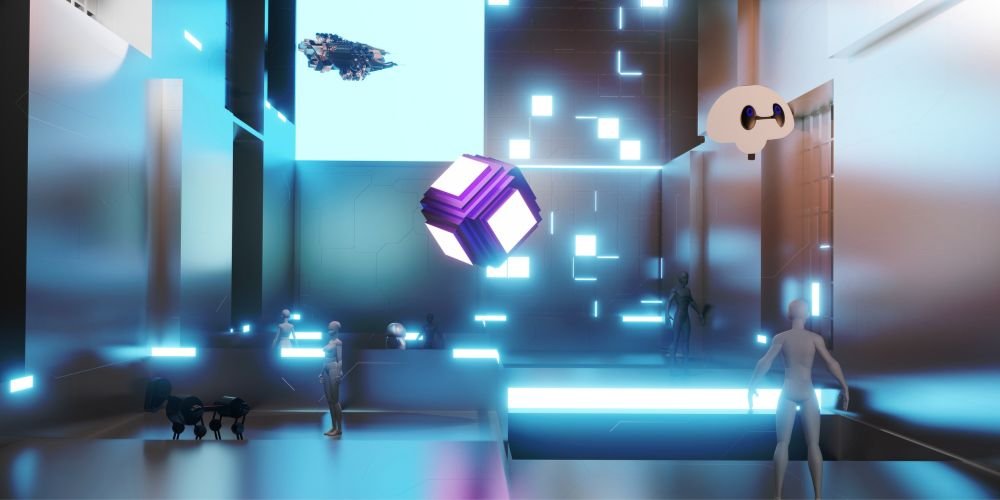

- In-Camera Visual Effects (ICVFX): This is the most visible application of VP. Actors and physical set pieces are filmed in front of a massive, curved LED wall (often referred to as “The Volume”) that displays a real-time rendered environment, so the final visual effects are captured directly by the camera. The background, lighting, and reflections are recorded in the same exposure as the performance, effectively baked into the shot.

- Parallax and Camera Tracking: To create the illusion of a real 3D space, the camera’s exact position and movement in the physical world are tracked with pinpoint accuracy. This tracking data is fed into the game engine, which updates the digital background’s perspective in real-time. As the camera moves, the background moves with it, creating a perfect parallax effect that tricks the eye into seeing a deep, three-dimensional world.

- Simul-cam and Augmented Reality (AR) Overlays: In outdoor or large-scale settings, VP can be used to superimpose digital elements over a live camera feed. A director can look at a monitor and see the real actors in a real location, with a digital creature or a futuristic city seamlessly integrated into the shot in real time, allowing them to frame and direct the scene with full context.
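The parallax principle described above comes down to simple geometry. The sketch below assumes, for illustration only, a single flat LED wall lying in the plane z = 0, a tracked camera in front of it (z > 0), and a virtual point placed “behind” the wall (z < 0). For each tracked camera position, it finds where that point must be drawn on the wall so it sits on the camera's line of sight. Real systems render a full perspective view per camera and handle curved walls; the function name `project_to_wall` and all coordinates are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float


def project_to_wall(camera: Vec3, point: Vec3) -> tuple[float, float]:
    """Return the (x, y) spot on a flat wall at z = 0 where a virtual point
    must be drawn so it appears in the right place from the camera's viewpoint.

    Assumes the camera is in front of the wall (z > 0) and the virtual point
    lies behind it (z < 0), so the sight line crosses the wall plane once.
    """
    if camera.z <= 0 or point.z >= 0:
        raise ValueError("camera must be in front of the wall and point behind it")
    # Parameter t where the line camera + t * (point - camera) reaches z = 0.
    t = camera.z / (camera.z - point.z)
    return (
        camera.x + t * (point.x - camera.x),
        camera.y + t * (point.y - camera.y),
    )


# Hypothetical distant mountain peak: 50 m behind the wall, 10 m up.
peak = Vec3(0.0, 10.0, -50.0)
for cam_x in (-1.0, 0.0, 1.0):  # dolly the camera 1 m left and right
    camera = Vec3(cam_x, 1.5, 5.0)
    print(cam_x, project_to_wall(camera, peak))
```

With the camera 5 m from the wall, a 1 m dolly shifts the drawn peak by about 0.91 m, so it nearly follows the camera and barely moves in frame, just as a distant mountain would. A point only 1 m behind the wall would shift by about 0.17 m on the wall and therefore slide noticeably across the frame. That difference is the parallax the tracking system has to reproduce.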

Reimagined Workflow: The Virtual Production Pipeline

Virtual Production doesn’t just change the technology on set; it completely reorders the traditional, linear filmmaking process. It is a more circular, iterative, and collaborative workflow that front-loads creative decisions.

This new pipeline empowers every department to collaborate from the earliest stages, ensuring a unified creative vision.

- Phase 1: World Building and Previsualization (Previs): This phase becomes vastly more important. Instead of simple storyboards, teams build the entire digital world of the film in a game engine. Directors, cinematographers, and production designers can then “walk around” this virtual world using VR headsets, planning every shot, testing camera lenses, and finalizing the lighting months before a single actor steps on set.

- Phase 2: On-Set Production with The Volume: The digital assets created in previs are loaded onto the LED Volume. The cast and crew arrive on a set where the final background is already visible and interactive. The director sees the final shot on their monitor, the actors can react to a tangible environment, and the cinematographer can light the scene using the LED wall itself as the primary light source.

- Phase 3: Streamlined Post-Production: Since the vast majority of visual effects are captured in-camera, the post-production process is significantly simplified. The work shifts from complex compositing and rotoscoping to more refined tasks, such as color grading, minor clean-up, and integrating any digital characters or elements that couldn’t be captured live.
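Testing camera lenses in previs, as described in Phase 1, largely comes down to matching a virtual camera's field of view to a real lens. Assuming an ideal rectilinear (pinhole) lens, horizontal field of view follows directly from focal length and sensor width. The 36 mm sensor width below is an illustrative value, not a specific camera; distortion and focus breathing are ignored.

```python
import math


def horizontal_fov_degrees(focal_length_mm: float, sensor_width_mm: float) -> float:
    """Horizontal field of view of an ideal rectilinear lens, in degrees.

    FOV = 2 * atan(sensor_width / (2 * focal_length)).
    """
    if focal_length_mm <= 0 or sensor_width_mm <= 0:
        raise ValueError("focal length and sensor width must be positive")
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


# Compare candidate lenses on a hypothetical 36 mm-wide sensor.
for focal in (18, 35, 50, 85):
    print(f"{focal} mm -> {horizontal_fov_degrees(focal, 36.0):.1f} degrees")
```

An 18 mm lens on a 36 mm-wide sensor gives exactly a 90-degree horizontal view, and longer lenses narrow it. Matching this number is what lets a virtual scout's framing carry over to the real camera on shoot day.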

The Technological Pillars of the VP Ecosystem

The Virtual Production revolution is being built upon a sophisticated stack of interconnected technologies. By 2026, these individual components will have matured, become more integrated, and in some cases, more accessible, forming a robust and powerful ecosystem for creators at all levels.

It is the seamless integration of these pillars that creates the magic of real-time, in-camera visual effects.

The Game Engine Ascendant: The Heart of Real-Time Creation

At the absolute center of the VP universe is the game engine. Originally designed to create interactive video games, these software platforms have evolved into remarkably powerful and photorealistic world-building tools.

By 2026, the distinction between a “game engine” and a “filmmaking suite” will have all but vanished.

- Unreal Engine 5 and Beyond: Epic Games’ Unreal Engine has become the de facto standard for high-end virtual production. Features like Nanite (which allows for near-infinite geometric detail) and Lumen (a dynamic global illumination and reflections system) have made it possible to create digital worlds that are virtually indistinguishable from reality. By 2026, Unreal Engine will be even more optimized for cinematic workflows, with improved real-time ray tracing, more intuitive tools for filmmakers, and deeper integrations with professional camera and lighting equipment.

- Unity and Other Specialized Engines: While Unreal Engine dominates, Unity is also a powerful player, particularly in the realms of AR and mobile-driven VP. We will also see the rise of more specialized, proprietary engines developed by major studios and VFX houses, each tailored to their specific pipeline needs.

The LED Volume: A Dynamic Window into Any World

The LED Volume is the iconic centerpiece of modern Virtual Production. It is a large, curved soundstage lined with high-resolution LED panels on the walls and ceiling, creating a fully immersive and controllable environment.

By 2026, LED Volumes will become more advanced, more common, and will come in a wider variety of sizes and configurations.

- Pixel Pitch and Color Accuracy: A key measure of an LED Volume's quality is its “pixel pitch” (the center-to-center distance between adjacent pixels, usually given in millimeters). As this distance shrinks, the resolution increases, thereby reducing artifacts such as moiré patterns. The standard for high-end volumes will be sub-2mm pixel pitches, and color science will have advanced to the point where the panels can closely match the color gamuts of high-end cinema cameras.

- Modular and Mobile Volumes: While the first generation of Volumes was massive and permanent, 2026 will see a rise in smaller, modular, and even mobile LED stages. This will enable productions to leverage the power of VP on smaller soundstages or even at specific locations, thereby increasing flexibility.

- Interactive Lighting: The LED panels are not just a background; they are the primary light source. They cast realistic, dynamic light and reflections onto the actors, props, and physical set pieces. A cinematographer can change the time of day from high noon to a golden-hour sunset with the press of a button, and the lighting on the actors will change instantly and realistically. This level of creative control is impossible with a green screen.
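The link between pixel pitch and resolution is plain arithmetic: the number of pixels along an edge is the edge length divided by the pitch. The sketch below assumes a hypothetical 20 m × 6 m wall. In practice, walls are assembled from fixed-size panels, so the true resolution is the per-panel resolution multiplied by the number of panels; this calculation is an approximation.

```python
def wall_resolution(width_m: float, height_m: float, pixel_pitch_mm: float) -> tuple[int, int]:
    """Approximate pixel count across an LED wall of the given size.

    Pixel pitch is the center-to-center spacing of adjacent pixels in
    millimeters, so pixels along an edge = edge length in mm / pitch.
    """
    if pixel_pitch_mm <= 0:
        raise ValueError("pixel pitch must be positive")
    width_px = round(width_m * 1000 / pixel_pitch_mm)
    height_px = round(height_m * 1000 / pixel_pitch_mm)
    return width_px, height_px


# The same hypothetical 20 m x 6 m wall at three different pitches.
for pitch in (2.8, 2.0, 1.5):
    w, h = wall_resolution(20.0, 6.0, pitch)
    print(f"{pitch} mm pitch: {w} x {h} = {w * h / 1e6:.1f} megapixels")
```

Because the pixel count scales with the inverse square of the pitch, moving from 2.8 mm to 1.5 mm more than triples the number of pixels the render hardware must fill.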

The Unseen Links: Real-Time Tracking and Data Integration

For the illusion to work, the digital world on the LED screens must respond perfectly to the movements of the physical camera. This is achieved through a sophisticated network of sensors and tracking systems.

By 2026, these tracking systems will become more robust, more accurate, and easier to calibrate.

- Camera Tracking Systems: The most common method is optical tracking, using infrared cameras mounted around the stage to track passive or active markers on the film camera. This data provides the exact position and orientation of the camera in 3D space, which is fed to the game engine dozens of times per second.

- Lens Calibration: It’s not enough to know where the camera is; the system also needs to know exactly what the camera is seeing. This requires a precise digital map of the lens’s characteristics, including its focal length, focus distance, and lens distortion. By 2026, this calibration process will become highly automated.

- Motion and Performance Capture (Mocap): For scenes involving digital characters, motion capture technology is seamlessly integrated into the VP workflow. An actor in a motion capture suit can perform on the physical set, and their movements can be translated onto a digital character within the game engine in real time. This allows the director to see and direct the final performance of the digital character live on set.
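The lens calibration bullet describes a “digital map” of the lens. One widely used way to express part of that map is a pinhole projection plus polynomial radial distortion, the radial terms of the Brown–Conrady model that calibration tools such as OpenCV also use. The sketch below is illustrative only: the class name `LensModel` and every coefficient value are hypothetical, tangential distortion is omitted, and focus distance (which real calibrations also track) is not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LensModel:
    """Hypothetical pinhole lens with two radial distortion coefficients."""
    focal_px: float   # focal length expressed in pixels
    cx: float         # principal point (optical center), x in pixels
    cy: float         # principal point (optical center), y in pixels
    k1: float = 0.0   # first radial distortion coefficient
    k2: float = 0.0   # second radial distortion coefficient

    def project(self, x: float, y: float, z: float) -> tuple[float, float]:
        """Map a 3D point in camera space (z pointing forward) to pixel coordinates."""
        if z <= 0:
            raise ValueError("point must be in front of the camera")
        # Perspective divide gives normalized image coordinates.
        xn, yn = x / z, y / z
        # Radial distortion grows with squared distance from the optical center.
        r2 = xn * xn + yn * yn
        scale = 1.0 + self.k1 * r2 + self.k2 * r2 * r2
        return (
            self.focal_px * xn * scale + self.cx,
            self.focal_px * yn * scale + self.cy,
        )


ideal = LensModel(focal_px=1800.0, cx=960.0, cy=540.0)
barrel = LensModel(focal_px=1800.0, cx=960.0, cy=540.0, k1=-0.08)  # hypothetical value
corner = (2.0, 1.0, 5.0)  # a point toward the edge of frame
print(ideal.project(*corner), barrel.project(*corner))
```

A negative `k1` pulls off-center points toward the middle of the frame (barrel distortion), while points on the optical axis are unaffected. The engine needs this model so the rendered background bends exactly the way the real lens bends the physical set in front of it.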

The Digital Backlot: The Rise of Reusable, Photorealistic Assets

One of the most profound long-term impacts of VP is the creation of a “digital backlot.” Instead of building a massive physical set for a New York City street, using it for one film, and then tearing it down, a studio can now build a photorealistic digital version of that street.

This digital asset can then be reused, modified, and improved upon for countless future productions, creating immense long-term value.

- Photogrammetry and Asset Libraries: The creation of these digital worlds is fueled by techniques like photogrammetry (creating 3D models from photographs) and vast asset libraries like Quixel Megascans (owned by Epic Games). These libraries contain thousands of ultra-realistic, film-quality 3D models of everything from rocks and trees to entire buildings.

- The Metaverse Connection: The high-quality digital assets created for films and TV shows in 2026 will be directly portable to other interactive experiences, such as video games, VR applications, and the burgeoning metaverse, creating a new, interconnected ecosystem of digital content.

The AI Co-Pilot: Generative AI’s Emerging Role in World Building

While still in its early stages, the integration of Generative AI into the VP pipeline is expected to be one of the most exciting developments by 2026. AI will act as a powerful creative assistant, accelerating the world-building process.

Generative AI will not replace artists, but will give them superpowers, allowing them to iterate on ideas at an unprecedented speed.

- Concept Art and Environment Generation: A director or artist can use text or sketch-based prompts to generate hundreds of variations of a landscape, a building, or an entire city in minutes.

- Automated 3D Asset Creation: AI tools will be able to take a 2D image or a simple description and generate a fully textured, production-ready 3D model, dramatically speeding up the asset creation pipeline.

- Digital Humans and AI-Driven Extras: The creation of believable digital humans is one of the final frontiers of VFX. By 2026, AI is expected to play a significant role in creating realistic skin textures, hair simulations, and even subtle facial animations. It will also be used to populate large digital scenes with AI-driven crowds of background extras who can react realistically to the action.

A New Creative Canvas: How VP is Reshaping the Art of Filmmaking

Virtual Production is more than just a collection of technologies; it is a catalyst for a new creative methodology. It fundamentally changes the “feel” of being on a film set and re-empowers key creative roles in ways that were lost in the green screen era.

By 2026, the creative benefits of VP will be as important a driver of its adoption as the financial and logistical ones.

“Fix it in Pre”: The Power of Iteration Before the Clock is Ticking

The old filmmaking adage was “fix it in post.” The new mantra is “fix it in pre.” The ability to build and explore the entire film world virtually before a single frame is shot is a game-changer.

This front-loading of creative decisions de-risks the entire production process, unlocking greater creative freedom.

- Virtual Scouting: Instead of flying a crew around the world, a director can use a VR headset to scout for locations within their digital world. They can test different camera angles, see what the light will look like at different times of day, and make crucial creative decisions from the comfort of a virtual studio.

- Collaborative Previsualization: Previously, previsualization was a specialized process performed by a separate team. In the VP pipeline, every department head—from the director and cinematographer to the production designer and costume designer—can be in the virtual world together, collaborating and iterating on the look and feel of a scene in real-time.

Empowering Actors and Directors: The Tangible Digital World

One of the biggest complaints about green screen filmmaking is that it can be alienating for actors. They are asked to deliver powerful, emotional performances while reacting to tennis balls on sticks in a sea of sterile green.

The LED Volume brings the world back to the actor, leading to more grounded and believable performances.

- An Immersive Environment: On a Volume set, an actor can see the breathtaking alien landscape or the bustling medieval city they are supposed to be in. This provides immediate context, allowing them to interact with their environment naturally.

- Real Eye Lines: Actors can make direct eye contact with elements in the background, which is impossible on a green screen. This small detail makes a huge difference in the final performance and eliminates the “dead-eyed” look that can sometimes plague VFX-heavy scenes.

- Director’s Clarity: The director is no longer imagining the shot; they are seeing it. They can provide more precise feedback to the actors and the camera operator because they are viewing the final, composited image on their monitor.

The Cinematographer’s New Canvas: Interactive Light and Reflection

For a Director of Photography (DP), a green screen set is a lighting nightmare. They have to simulate, on set, the light that a digital environment added months later would cast.

The LED Volume turns the environment into the DP’s most powerful lighting tool.

- Naturalistic Lighting: The light cast by the LED panels onto the actors is photorealistic because it is the light from the environment. The soft, ambient glow of a twilight sky or the harsh, flickering reflections from a futuristic cityscape are all created naturally and captured in-camera.

- Golden Hour on Demand: One of the most famous benefits of VP is the ability to control time. A traditional outdoor shoot is a race against the sun, with the beautiful “golden hour” light available for only a brief window each day. In a Volume, the DP can set the sun to golden hour and keep it there for a 12-hour shooting day.
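In a game engine, “setting the sun” usually means orienting a directional light. The sketch below converts a sun elevation and azimuth into a unit direction vector and checks a golden-hour condition. Several things here are assumptions: an east-north-up frame (x east, y north, z up), azimuth measured clockwise from north, and a golden-hour band of roughly -4 to +6 degrees of solar elevation, which is one commonly cited photographic convention rather than a fixed standard. Engines use their own axis conventions, so the vector would need remapping before use.

```python
import math

# Assumed golden-hour band in degrees of solar elevation (one common
# photographic convention; other sources draw the limits differently).
GOLDEN_HOUR_MIN_DEG = -4.0
GOLDEN_HOUR_MAX_DEG = 6.0


def sun_direction(elevation_deg: float, azimuth_deg: float) -> tuple[float, float, float]:
    """Unit vector pointing from the scene toward the sun.

    Frame: x = east, y = north, z = up; azimuth is measured clockwise
    from north (0 = north, 90 = east, 270 = west).
    """
    el = math.radians(elevation_deg)
    az = math.radians(azimuth_deg)
    return (
        math.cos(el) * math.sin(az),  # east component
        math.cos(el) * math.cos(az),  # north component
        math.sin(el),                 # up component
    )


def is_golden_hour(elevation_deg: float) -> bool:
    return GOLDEN_HOUR_MIN_DEG <= elevation_deg <= GOLDEN_HOUR_MAX_DEG


# "Holding" a low western sun for the whole shooting day just means the
# values stay fixed instead of advancing with the clock.
held_elevation, held_azimuth = 4.0, 270.0
print(sun_direction(held_elevation, held_azimuth), is_golden_hour(held_elevation))
```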

The Ripple Effect: VP’s Expansion Across the Entertainment Spectrum

While high-end feature films have been the most visible showcase for Virtual Production, its influence by 2026 will have spread far and wide across the entire entertainment landscape. Its unique combination of speed, flexibility, and quality makes it a perfect fit for a variety of media formats.

VP is becoming the go-to solution for any production that requires creating high-quality visuals on a tight schedule and with a high degree of creative control.

Episodic Television: Cinematic Quality on a Broadcast Schedule

The success of shows like The Mandalorian and House of the Dragon has proven that VP is the key to delivering feature-film-level visual effects on the compressed schedules and budgets of episodic television. By 2026, it will be the standard for high-concept sci-fi, fantasy, and historical dramas. It allows a TV show to convincingly travel to a new alien planet or a different historical era every week without the prohibitive cost and logistical nightmare of building massive sets or flying the crew to exotic locations.

Advertising and Commercials: Ultimate Flexibility and Brand Control

The fast-paced world of advertising is a perfect fit for Virtual Production. A single LED Volume can be used to shoot commercials for a dozen different products in a single week, each with a completely different background and lighting setup.

VP gives ad agencies and their clients unprecedented control over the final image.

- Instant Location Changes: A car can be shot on a winding mountain road in the Alps in the morning and on a neon-drenched Tokyo street in the afternoon, all without leaving the soundstage.

- Perfect Product Shots: The lighting and reflections on a product, which are critical in advertising, can be controlled with surgical precision using the LED Volume. The brand’s logo can be perfectly reflected on a car’s hood or a perfume bottle.

Live Events and Broadcast: Merging the Physical and Virtual

Virtual Production is breaking out of pre-recorded content and moving into the live space. By 2026, it will be used to create spectacular, mixed-reality experiences for concerts, awards shows, and news broadcasts.

This fusion of real and virtual elements will create a new level of immersion for live audiences.

- Interactive Concert Visuals: A musical artist can perform on a stage surrounded by LED walls that display dynamic, interactive visuals that react to the music and the crowd in real-time.

- Augmented Reality Broadcasts: News and sports broadcasts will utilize AR overlays to create sophisticated 3D graphics that appear to be integrated into the studio environment with the anchors. For example, a weather reporter could walk around a 3D map of an approaching hurricane that appears to float in the studio.
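Visuals that “react to the music” often start from something as simple as measuring loudness. The sketch below computes the root-mean-square (RMS) level of each block of audio samples and maps it to a brightness value between 0 and 1, smoothed with an exponential moving average so the wall does not flicker on every beat. The class `ReactiveBrightness` and its `smoothing` and `gain` parameters are hypothetical illustrations, not part of any real show-control system.

```python
import math


def rms(samples: list[float]) -> float:
    """Root-mean-square level of one block of audio samples in [-1, 1]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


class ReactiveBrightness:
    """Hypothetical smoother mapping audio level to LED wall brightness in [0, 1]."""

    def __init__(self, smoothing: float = 0.8, gain: float = 2.0):
        self.smoothing = smoothing  # 0 = jumpy; closer to 1 = slower response
        self.gain = gain            # boosts quiet passages before clamping
        self.value = 0.0

    def update(self, samples: list[float]) -> float:
        target = min(1.0, self.gain * rms(samples))
        # Exponential moving average blends the new level with the previous value.
        self.value = self.smoothing * self.value + (1 - self.smoothing) * target
        return self.value


# Synthetic signal: a quiet block followed by a loud one.
wall = ReactiveBrightness()
quiet = [0.05 * math.sin(i / 10) for i in range(512)]
loud = [0.6 * math.sin(i / 10) for i in range(512)]
print(wall.update(quiet), wall.update(loud))
```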

The New Frontiers: Theme Parks, Gaming, and Interactive Experiences

The technologies and assets developed for Virtual Production will have a life far beyond the screen. The line between filmmaking, gaming, and location-based entertainment will become increasingly blurred.

The digital worlds built for a movie can be repurposed to create a whole universe of related content.

- Theme Park Attractions: The real-time rendering and tracking technologies of VP are a perfect fit for creating next-generation dark rides and interactive theme park attractions, where guests are placed inside a living, breathing digital world.

- A New Era of Video Games: With filmmakers and game developers utilizing the same tools (Unreal Engine), we can expect a much deeper integration between movies and their video game tie-ins. The assets, characters, and even the lighting from the film can be directly used in the game, creating a perfectly cohesive transmedia experience.

The Democratization of Storytelling: Accessibility of VP

For all its power, the first wave of Virtual Production was defined by its exclusivity. Building and operating a state-of-the-art LED Volume was a multimillion-dollar affair, accessible only to the likes of Disney, HBO, and the largest VFX houses. A key trend leading into 2026 is the gradual democratization of these powerful tools.

While the high-end will always be expensive, a new ecosystem of more affordable and accessible VP solutions is emerging, putting this technology within reach of independent filmmakers, smaller studios, and even students.

The Path to Accessibility: Lower Costs and New Solutions

Several factors are driving down the cost and complexity of Virtual Production, opening the door for a broader range of creators.

These innovations are creating a tiered ecosystem, with a VP solution available for almost any budget.

- Falling Hardware Costs: The price of high-quality LED panels, powerful GPUs, and camera tracking systems is steadily decreasing as the technology matures and manufacturing scales up.

- The Rise of Smaller VP Stages: Not every production needs a 360-degree, 80-foot-diameter Volume. By 2026, a thriving market for smaller, more affordable VP stages is expected, with some models consisting of just a single large LED wall, ideal for commercials, music videos, and smaller indie film scenes.

- In-Home and “Indie” VP: The most passionate creators are building small-scale VP setups in their own garages using large-screen TVs, projectors, and game engine plugins, proving that the core principles of VP can be applied even at a micro-budget level.

- Cloud-Based VP and Virtual “Volumes”: A major trend will be the shift to cloud-based rendering. This will allow productions to offload the heavy computational work to a powerful cloud server, reducing the need for expensive on-set hardware. It also opens the door to fully virtual collaboration, where a director in Los Angeles and a DP in London can work together in a shared virtual environment.
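Whether rendering can move off set depends partly on timing: a real-time system has to deliver each frame within the interval the camera's frame rate allows. The sketch below does that arithmetic. It is deliberately simple and assumes the whole round trip must complete within a single frame interval; real systems pipeline work across frames, but any extra delay shows up as the background lagging behind camera moves. The render time, network round trip, and the catch-all `overhead_ms` (tracking, encoding, decoding, display processing) are hypothetical inputs.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame at the given frame rate, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def fits_budget(fps: float, render_ms: float, round_trip_ms: float,
                overhead_ms: float = 0.0) -> bool:
    """True if render time, network round trip and other overhead fit in one frame."""
    return render_ms + round_trip_ms + overhead_ms <= frame_budget_ms(fps)


# Hypothetical numbers: 20 ms to render a frame, 15 ms network round trip.
for fps in (24, 30, 60):
    print(fps, round(frame_budget_ms(fps), 2), fits_budget(fps, 20.0, 15.0))
```

At 24 fps there is roughly 41.7 ms per frame, so the hypothetical 35 ms total fits; at 30 or 60 fps it does not. This is one reason cloud rendering tends to suit previs and remote collaboration more readily than the live, camera-tracked background.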

The New Crafts: The Evolving Skill Sets of a VP Crew

The rise of Virtual Production is creating a demand for a new generation of filmmakers with a hybrid skillset that blends the artistic sensibilities of traditional filmmaking with the technical expertise of the video game industry.

By 2026, film schools and training programs will have fully integrated VP into their curricula, creating a new talent pipeline for these essential roles.

- The Virtual Art Department (VAD): This team is responsible for creating and managing real-time 3D environments within the game engine.

- The Brain Bar: This is the on-set nerve center of a VP shoot, staffed by technicians who operate the game engine, manage camera tracking, and ensure the entire system runs smoothly.

- The VP Supervisor: This is the key creative and technical leader who bridges the gap between the director’s vision and the technical execution, overseeing the entire VP pipeline from start to finish.

The Grand Challenges: Hurdles on the Road to Ubiquity

The path to a future where Virtual Production is the default method of filmmaking is not without its obstacles. The technology is still rapidly evolving, and the industry will face significant technical, creative, and logistical challenges in 2026 and beyond.

Overcoming these hurdles will be key to unlocking the final 10% of realism and efficiency that will make VP truly ubiquitous.

The Uncanny Valley Revisited: The Quest for Photorealistic Digital Humans

While VP is masterful at creating environments, creating a fully digital human that is indistinguishable from a real actor remains the ultimate challenge. The “uncanny valley”—the unsettling feeling we get when a digital human is almost, but not quite, perfect—is still a major hurdle. By 2026, a combination of advanced scanning, AI-driven animation, and real-time ray tracing will get us closer than ever, but achieving true photorealism for a lead character will still be incredibly difficult and expensive.

The Data Tsunami: Managing Petabytes of Digital Assets

The photorealistic digital worlds of Virtual Production are built from an enormous amount of data. A single project can involve petabytes of 3D models, high-resolution textures, and complex scene files. Managing, storing, and transferring this data presents a significant logistical challenge that necessitates a robust IT infrastructure.
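To see how quickly asset data piles up, consider uncompressed textures alone. A texture's size is width × height × channels × bytes per channel, and a full mipmap chain (the successively halved copies engines use for distant surfaces) adds roughly a third more. The texture count below is hypothetical, and production files are usually compressed, so treat the result as an illustration of scale rather than any real project's footprint. Camera footage, scans, and scene versions add to the total.

```python
def texture_bytes(width: int, height: int, channels: int = 4,
                  bytes_per_channel: int = 1, mipmaps: bool = False) -> int:
    """Uncompressed size of one texture in bytes, optionally including its mip chain."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * channels * bytes_per_channel
        if not mipmaps or (w == 1 and h == 1):
            break
        # Each mip level halves both dimensions, never going below 1 pixel.
        w, h = max(1, w // 2), max(1, h // 2)
    return total


GIB = 1024 ** 3
TIB = 1024 ** 4
# One 8K RGBA texture at 16 bits (2 bytes) per channel, with mipmaps.
one_8k = texture_bytes(8192, 8192, channels=4, bytes_per_channel=2, mipmaps=True)
# Hypothetical environment with 2,000 such textures (color, normal, roughness maps, etc.).
print(f"one 8K texture: {one_8k / GIB:.2f} GiB; 2,000 of them: {2000 * one_8k / TIB:.2f} TiB")
```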

The Standardization Struggle: Creating a Common Language for VP

The Virtual Production ecosystem is currently a patchwork of different software, hardware, and proprietary workflows. For the industry to truly scale, there needs to be a greater degree of standardization. Efforts like the Universal Scene Description (USD) format are a step in the right direction; however, achieving seamless interoperability between different game engines, tracking systems, and digital content creation tools will remain an ongoing challenge.

The Creative Trap: Avoiding the “Video Game” Look

One of the artistic challenges of VP is avoiding a look that feels too clean, too perfect, or too much like a video game. The real world is messy and imperfect. Experienced cinematographers and production designers are learning new techniques to “dirty up” their digital worlds, adding the subtle imperfections, grit, and texture that make a scene feel real and lived-in.

Conclusion

The growth of Virtual Production is not merely an incremental improvement in filmmaking technology, but a fundamental reimagining of the process. As we look to 2026, we see a world where the barriers between imagination and execution have been torn down. We see directors and actors working in worlds as tangible and immersive as any physical location, empowered by a level of real-time control that was once the stuff of science fiction. We see a technology that is moving beyond the confines of Hollywood blockbusters to empower a new generation of storytellers in television, advertising, live events, and beyond.

The challenges are significant, but the momentum is undeniable. Virtual Production is collapsing the old, linear pipeline and replacing it with a fluid, collaborative, and iterative canvas for creation. It is making the impossible possible, and doing so with greater efficiency, safety, and creative freedom. The story of filmmaking has always been a story of innovation, a relentless pursuit of new ways to capture light and shadow, to build worlds, and to tell stories that move us. In 2026, the next great chapter of that story will be written, not on film or on a green screen, but on the luminous, immersive canvas of Virtual Production.