Key Points

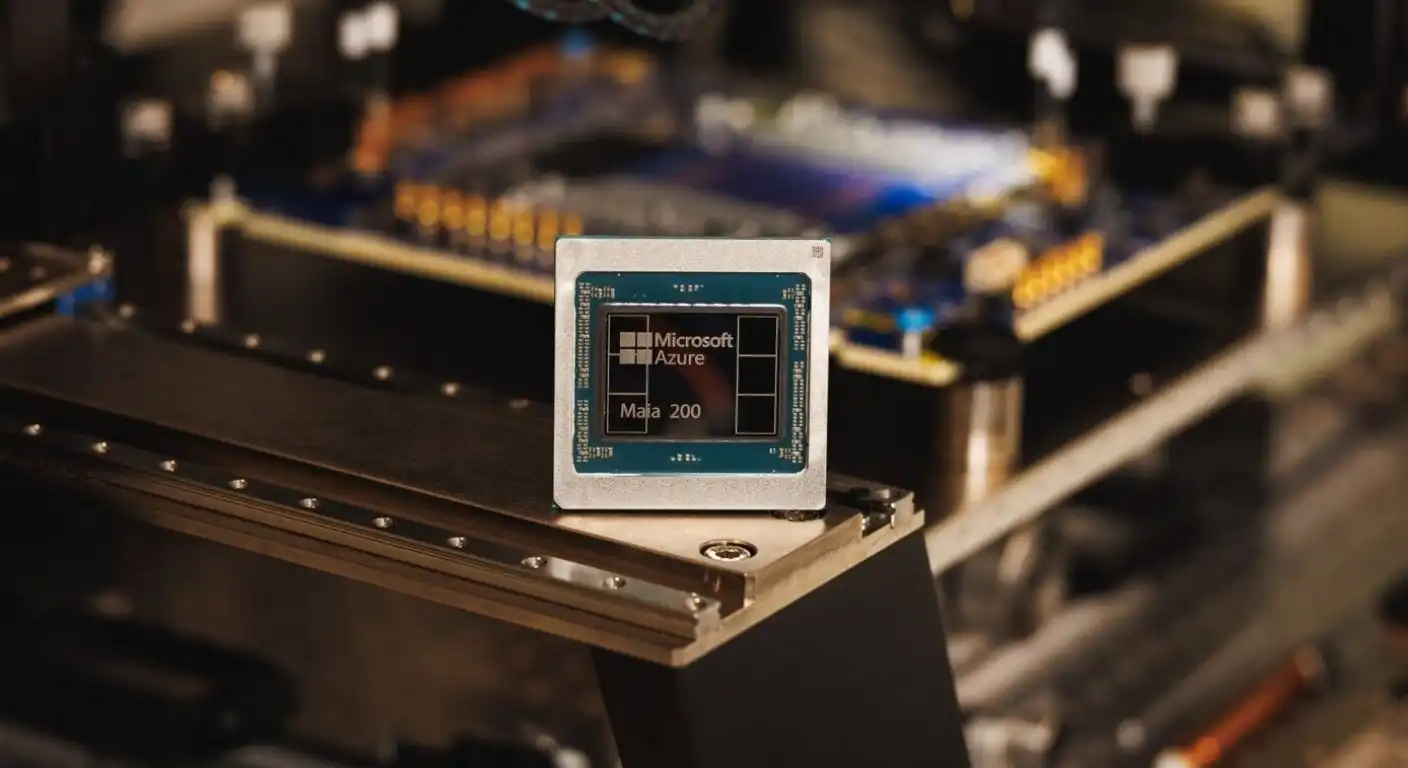

- Microsoft has unveiled its new in-house AI accelerator, the Azure Maia 200.

- The chip is designed for AI inference and is focused on efficiency and performance per dollar.

- It’s built on TSMC’s 3nm process, and Microsoft claims it delivers three times the 4-bit (FP4) performance of Amazon’s Trainium3.

- The Maia 200 draws roughly half the power of Nvidia’s top-of-the-line Blackwell B300 GPU.

Microsoft has just revealed its latest weapon in the AI arms race: the Azure Maia 200. The in-house-designed chip is the next generation of Microsoft’s custom AI accelerators, succeeding the Maia 100, and it’s built to compete with custom silicon from rivals Amazon and Google.

The Maia 200 is built for inference, the process of running a trained AI model to answer a user’s query. Microsoft is touting it as its “most efficient inference system” ever, with a focus on delivering more performance per dollar and a lower environmental impact.

The new chip is built on TSMC’s advanced 3nm process. Microsoft claims it delivers roughly 10 petaflops of computing performance at 4-bit (FP4) precision, three times the FP4 performance of Amazon’s latest Trainium3 chip. It also carries a large pool of high-bandwidth memory.

While it’s hard to make a direct comparison with Nvidia’s top-of-the-line GPUs, which are designed for a much wider range of tasks, the Maia 200 does have one clear advantage: power consumption. It draws roughly half the power of Nvidia’s Blackwell B300, a major selling point at a time when the public is increasingly concerned about the energy consumption of AI data centers.

Microsoft’s messaging around the Maia 200 centers on a responsible approach to the AI boom. The company has been stressing its commitment to the communities near its data centers, and it presents this more efficient chip as a key part of that strategy.

The Maia 200 has already been deployed in one of Microsoft’s Azure data centers, with more deployments planned. It’s a clear sign that the tech giants are no longer relying solely on Nvidia to power their AI ambitions; they are increasingly designing their own custom hardware to gain an edge in the competitive AI market.