Key points

- Researchers at UC Riverside show, through simulations, that large-scale quantum computers could be built by connecting multiple smaller chips.

- The study shows that even imperfect connections between chips can still allow for fault-tolerant quantum computation.

- The research simulates realistic architectures, using parameters inspired by Google’s quantum infrastructure.

- The findings suggest that high-fidelity individual chips, rather than perfect interconnections, are key to scalable quantum computing.

Researchers at the University of California, Riverside (UCR) have reported a significant advance in quantum computing. Their study, published in Physical Review A, lays out a practical route to large-scale, fault-tolerant quantum computers built by connecting many smaller quantum chips.

This approach tackles a major hurdle in the field: scaling quantum systems up to the number of qubits that practical applications require. Today's quantum computers are still too small for most large-scale tasks.

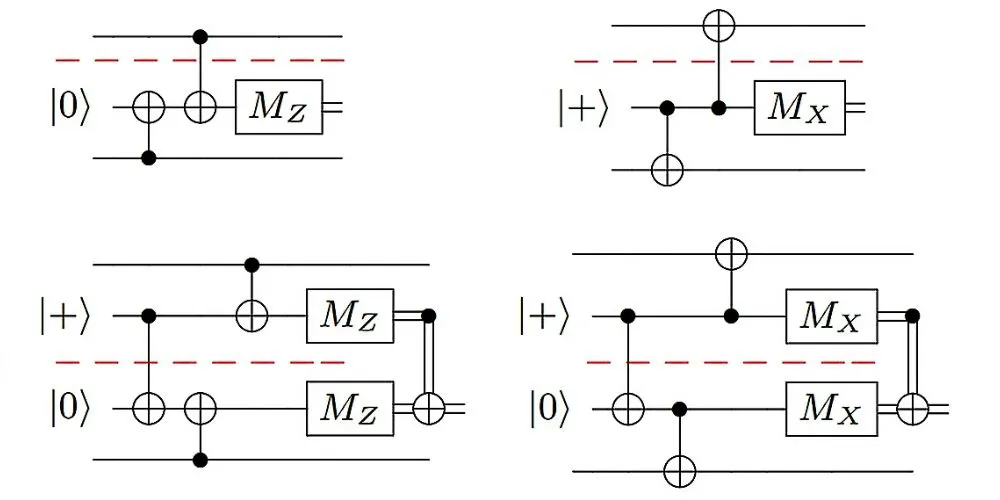

The UCR team's research focuses on "scalable" quantum architectures: systems built from many smaller chips that work together.

Their simulations revealed that even with imperfect connections between these chips, a functioning, fault-tolerant quantum system can be built. This is a crucial finding, as connecting separate chips, particularly those housed in different cryogenic refrigerators, introduces noise that can hinder error correction.

Surprisingly, the team found that even when the links between chips were up to ten times noisier than operations within a single chip, the simulated system still detected and corrected errors successfully.
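To see why a few noisier links need not break error correction, consider a deliberately simplified toy model. This is an assumption for illustration, not the study's method, whose simulations were far more detailed. A distance-d repetition code protects one bit against flips and is decoded by majority vote, and a few qubits sitting on a hypothetical inter-chip link flip ten times more often than the rest. The sketch below computes the exact probability that majority vote fails. The function names, the 1e-3 per-qubit error rate, and the choice of two link qubits are all hypothetical.

```python
def majority_vote_failure(flip_probs):
    """Exact probability that majority-vote decoding of a repetition code fails.

    flip_probs[i] is the independent bit-flip probability of physical qubit i.
    Toy model only: no measurement errors and no phase errors.
    """
    n = len(flip_probs)
    if n % 2 == 0:
        raise ValueError("use an odd number of qubits so majority vote is well defined")
    # dist[k] = probability that exactly k of the qubits seen so far have flipped
    dist = [1.0]
    for p in flip_probs:
        new = [0.0] * (len(dist) + 1)
        for k, prob in enumerate(dist):
            new[k] += prob * (1.0 - p)  # this qubit did not flip
            new[k + 1] += prob * p      # this qubit flipped
        dist = new
    # Decoding fails when more than half of the qubits flipped.
    return sum(dist[n // 2 + 1:])


def chip_with_noisy_links(distance, p_chip, link_factor=10.0, n_link=2):
    """Hypothetical layout: n_link qubits sit on a noisier inter-chip link."""
    if not 0 <= n_link <= distance:
        raise ValueError("n_link must be between 0 and distance")
    return [p_chip * link_factor] * n_link + [p_chip] * (distance - n_link)


if __name__ == "__main__":
    p_chip = 1e-3  # assumed per-qubit error rate inside a chip
    for d in (3, 5, 7, 9):
        uniform = majority_vote_failure([p_chip] * d)
        linked = majority_vote_failure(chip_with_noisy_links(d, p_chip))
        print(f"d={d}: uniform {uniform:.2e}, with 10x noisier links {linked:.2e}")
```

In this toy model the noisier links raise the failure probability compared with the uniform case. It still stays below the 1e-3 physical error rate and keeps falling as the distance grows, because most of the protection comes from the many high-fidelity qubits inside each chip.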

This finding challenges the common assumption that flawless connections between chips are essential for scalable quantum computing. Lead author Mohamed A. Shalby, a doctoral candidate at UCR, emphasizes that the focus now shifts from pursuing perfect hardware to ensuring high-fidelity operation within each chip.

The team ran thousands of simulations covering six modular designs and several ways of connecting the chips, using realistic parameters based on Google's existing quantum infrastructure.

The findings underline the importance of fault tolerance: the ability of a quantum system to detect and correct errors automatically. This matters because each "logical" qubit, the reliable unit of information that algorithms actually use, must be encoded across many error-prone physical qubits.
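As a rough illustration of how many physical qubits one logical qubit can need, the sketch below assumes a rotated surface code, a common choice for superconducting hardware. The article does not say which code the study used. A rotated surface code of distance d uses d² data qubits plus d² − 1 measurement qubits, or 2d² − 1 physical qubits in total. The sketch pairs that count with the widely used heuristic p_L ≈ A (p/p_th)^((d+1)/2). The threshold p_th = 1%, the prefactor A = 0.1, and all function names are illustrative assumptions, not values from the study.

```python
def physical_qubits_rotated_surface_code(d):
    """Data qubits (d*d) plus measurement qubits (d*d - 1) for one logical qubit."""
    if d < 3 or d % 2 == 0:
        raise ValueError("code distance should be an odd integer >= 3")
    return 2 * d * d - 1


def estimated_logical_error(p, d, p_th=1e-2, prefactor=0.1):
    """Heuristic below-threshold scaling p_L ~ A * (p / p_th) ** ((d + 1) / 2), for odd d."""
    return prefactor * (p / p_th) ** ((d + 1) // 2)


def distance_for_target(p, target, p_th=1e-2, prefactor=0.1, max_d=101):
    """Smallest odd distance whose estimated logical error rate meets the target."""
    if p >= p_th:
        raise ValueError("error correction only helps below threshold (p < p_th)")
    for d in range(3, max_d + 1, 2):
        if estimated_logical_error(p, d, p_th, prefactor) <= target:
            return d
    raise ValueError("target not reachable within max_d")


if __name__ == "__main__":
    p = 1e-3  # assumed physical error rate per operation
    d = distance_for_target(p, target=5e-13)
    print(f"distance {d}: {physical_qubits_rotated_surface_code(d)} physical qubits per logical qubit")
```

With these assumed numbers, the script prints a distance of 23, which works out to 1,057 physical qubits for a single logical qubit. Counts like this are why linking many chips, rather than building one enormous chip, is attractive.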

The research suggests that building large, reliable quantum computers is now a more achievable goal, potentially accelerating the timeline for widespread practical applications in areas such as chemistry, materials science, and data security.

The team’s work provides a concrete roadmap for the development of more powerful and usable quantum computers in the near future.