Key Points

- Generative AI tools are making it easier to create fake data and images that evade detection, threatening the integrity of scientific publishing.

- Researchers, publishers, and tech companies are developing AI tools to detect AI-generated content in academic papers.

- Integrity experts suspect that AI-fabricated content, particularly images and data, is already infiltrating scholarly research.

- Some experts believe that future advances in detection technology will eventually expose today's AI-generated fraud.

The rise of generative artificial intelligence (AI) has created new challenges for scientific publishing, making it easier to produce fake manuscripts, images, and data that evade human detection. Research-integrity experts are increasingly worried that these technologies could undermine the reliability of the scholarly literature. Jana Christopher, an image-integrity analyst at FEBS Press in Germany, warns that generative AI tools are evolving rapidly, posing significant challenges for those working in publication ethics and image integrity.

AI tools capable of creating text, images, and data have sparked an arms race among researchers, publishers, and technology companies, which are racing to build AI-based tools that can detect deceptive content produced with generative AI. Forensic specialist Elisabeth Bik notes that although many journals accept AI-generated text under certain conditions, using AI to fabricate images or data remains unacceptable. She suspects that AI-fabricated data may already be appearing in published research, as fraudulent paper mills exploit these capabilities.

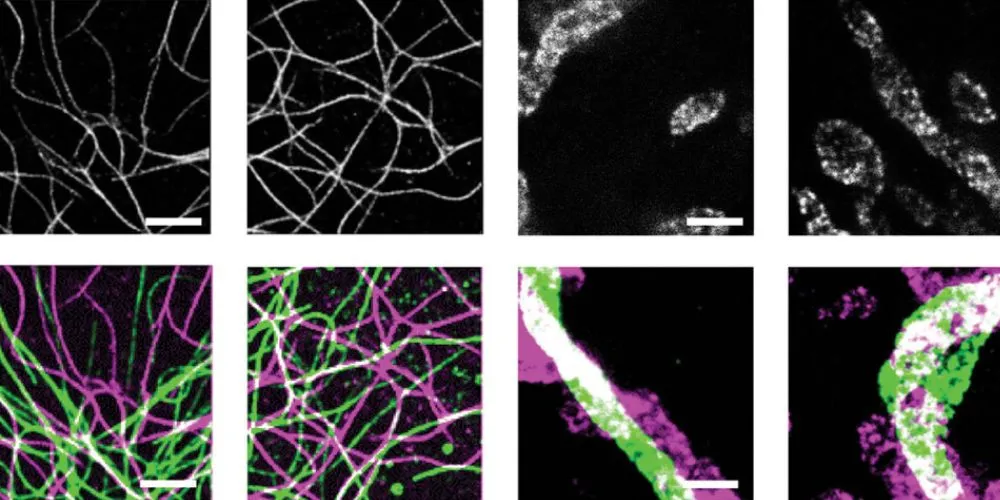

A particularly troubling development is how hard it is to detect AI-generated images, which are often indistinguishable from authentic ones to the human eye. To address this, companies such as Imagetwin and Proofig have updated their software to flag AI-generated content in research images. Proofig reports that its algorithm identifies AI-generated images with 98% accuracy and a very low false-positive rate. However, these tools are still in development, and flagged images still require expert review before any conclusion is drawn.

Publishers and industry organizations are also stepping up efforts to combat AI-facilitated fraud. The International Association of Scientific, Technical and Medical Publishers (STM) is tackling paper mills and generative-AI-related problems through its STM Integrity Hub. Christopher, who leads a working group on image integrity, suggests that embedding invisible watermarks in images as they are produced in the lab could help verify their authenticity.

While solutions are being explored, experts such as scientific image sleuth Kevin Patrick caution that publishers may not act quickly enough to prevent a new wave of research misconduct. Despite these fears, some remain optimistic that detection technology will eventually catch up and expose today's AI-generated content, so that fraudulent work will not evade detection indefinitely.